Why regulating facial recognition technology is so problematic

- Written by Liz Campbell, Francine McNiff Professor of criminal jurisprudence, Monash University

The use of automated facial recognition technology (FRT) is becoming more commonplace globally, in particular in China, the UK and now Australia.

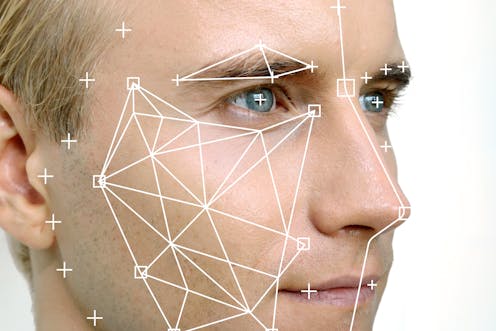

FRT means we can identify individuals based on an analysis of their geometric facial features, drawing a comparison between an algorithm created from the captured image and one already stored, such as a driving licence, custody image or social media account.

FRT has numerous private and public-sector applications when identity verification is needed. These include accessing a secure area, unlocking a mobile device or boarding a plane. It’s also able to recognise people’s moods, reactions and, apparently, sexuality.

And it’s more valuable for policing than ordinary CCTV cameras as it can identify individuals in real time and link them to stored images. This is of immense value to the state in criminal investigations, counter-terrorism operations and border control.

How it impinges on rights to privacy

But the deployment of FRT, which is already being used by the AFP and state police forces and by private companies elsewhere (airports, sporting venues, banks and shopping centres), is under-regulated and based on questionable algorithms that are not publicly transparent.

This technology has major implications for people’s privacy rights, and its use can worsen existing biases and discrimination in policing practices.

The use of FRT impinges on privacy rights by creating an algorithm of unique personal characteristics. This in turn reduces people’s characteristics to data and enables their monitoring and surveillance. These data and images will also be stored for a certain period of time, opening up the possibility of hacking or defrauding.

In addition, FRT can expose people to potential discrimination in two ways. First, state agencies may misuse the technologies in relation to certain demographic groups, whether intentionally or otherwise.

And secondly, research indicates that ethnic minorities, people of colour and women are misidentified by FRT at higher rates than the rest of the population. This inaccuracy may lead to members of certain groups being subjected to heavy-handed policing or security measures and their data being retained inappropriately.

This is particularly relevant in relation to communities that are already targeted disproportionately in Australia.

What lawmakers are trying to do

Parliament has drafted a bill in an effort to regulate this space, but it leaves a lot to be desired. The bill is currently in second reading; no date has been set for a vote.

If passed, the bill would enable the exchange of identity information between the Commonwealth government, state and territory governments and “non-government entities” (which haven’t been specified) through the creation of a central hub called “The Capability”, as well as the National Driver Licence Facial Recognition Solution, a database of information contained in government identity documents, such as driver licences.

The bill would also authorise the Department of Home Affairs to collect, use and share identification information in relation to a range of activities, such as fraud prevention, law enforcement, community safety, and road safety.

It would be far preferable for such legislation to permit identity-sharing only for a limited range of serious offences. As written, this bill relates to people who have not been convicted of any criminal offences – and, in fact, need not be suspected of any offence.

Also, the Australian people have not consented to their data being shared in this way. Such sharing would have a disproportionate effect on our right to privacy.

Importantly, the breach of the right to privacy is compounded by the fact that the bill would permit the private sector to access the identity matching services. Though the private sector already uses image comparison and verification technologies to some degree, for instance when banks seek to detect money laundering, the bill would extend this. The bill does not provide sufficient safeguards or penalties for private entities if they use the hub or people’s data inappropriately.

The prevalence of false-positives in identity matches

In a practical sense, there are also concerns about the reliability and accuracy of FRT, which is developing rapidly but is not without major problems. Whether these problems are just bugs or a feature of FRT remains to be seen.

Experience from the UK illustrates these issues clearly. South Wales Police, the UK’s national lead on facial recognition technology, used NeoFace, a system developed by the Japanese company NEC, at 18 public gatherings between May 2017 and March 2018. It found that 91% of matches, or 2,451 instances, incorrectly identified innocent members of the public as being on a watchlist. Manual oversight of the program revealed the matches to be false-positives.
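To put the South Wales figures in proportion, the short calculation below works backwards from the two numbers quoted above (2,451 false matches, said to be 91% of all matches). It is only a rough sketch: the percentage is rounded, so the implied totals are approximate, and the variable names are illustrative rather than taken from any police report.

```python
# Figures quoted in the article for South Wales Police.
false_matches = 2451        # matches later found to be incorrect
false_match_share = 0.91    # stated share of all matches that were incorrect (rounded)

# Implied totals; approximate because the 91% figure is rounded.
total_matches = round(false_matches / false_match_share)
correct_matches = total_matches - false_matches

print(f"Implied total matches: about {total_matches}")
print(f"Implied correct matches: about {correct_matches}")
```

On these figures, the system raised roughly ten incorrect alerts for every correct one.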

The London Metropolitan Police is also running an FRT pilot. It recorded somewhat better figures, but still had a false-positive identification rate of 30% at the Notting Hill Carnival and 22% at Remembrance Day, both in 2017.

The central role of private companies in the development of this technology is also important to flag. FRT algorithms are patented, and there is no publicly available indication of the measurement or standard that represents an identity “match”.

Legislating in a fast-moving, technologically driven space is not an enviable task. There is some benefit to a degree of flexibility in legal definitions and rules so the law doesn’t become static and redundant too soon. But even on the most generous reading, the bill is a flawed way of regulating a powerful and problematic technology that is here to stay, like it or not.
