Social media has huge problems with free speech and moderation. Could decentralised platforms fix this?

- Written by Chris Berg, Principal Research Fellow and Co-Director, RMIT Blockchain Innovation Hub, RMIT University

Over the past few months, Twitter took down the account of the then-President of the United States and Facebook temporarily stopped users from sharing Australian media content. This raises the question: do social media platforms wield too much power?

Whatever your personal view, a variety of “decentralised” social media networks now promise to be custodians of free speech and of censorship-resistant, crowd-curated content, free of corporate and political interference.

But do they live up to this promise?


Cooperatively governed platforms

In “decentralised” social media networks, control is actively shared across many servers and users, rather than a single corporate entity such as Google or Facebook.

This can make a network more resilient, as there is no central point of failure. But it also means no single arbiter is in charge of moderating content or banning problematic users.

Some of the most prominent decentralised systems use blockchain, the technology often associated with the cryptocurrency Bitcoin. A blockchain system is a kind of distributed online ledger hosted and updated by thousands of computers and servers around the world.

All of these participating computers, known as nodes, must agree on the contents of the ledger. Thus, it’s almost impossible for any single node in the network to meddle with the ledger without the updates being rejected.
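To make the idea of a tamper-evident ledger concrete, here is a minimal sketch of a hash-linked chain of blocks. It is an illustration only, not how Steem or any real blockchain is implemented: the function names (`block_hash`, `append_block`, `is_valid`) are hypothetical, and the sketch leaves out the network-wide agreement step entirely. It shows only why a change to an old block is easy for other nodes to detect.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block (assumption)


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a new block that is locked to the block before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash; an edit to any block breaks the chain of links."""
    prev_hash = GENESIS_HASH
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
for post in ["first post", "second post", "third post"]:
    append_block(ledger, post)

print(is_valid(ledger))  # True
ledger[1]["data"] = "edited post"  # a single node quietly alters an old entry
print(is_valid(ledger))  # False
```

In a real network, every other node would run a check along these lines and reject the altered copy, which is what makes unilateral edits impractical.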

A blockchain is a type of ledger or database which is ‘immutable’, meaning its data can’t be altered. As new data comes in it is entered into a new block, which is then locked into an existing chain of blocks.

Shutterstock


Gathering ‘Steem’

One of the most famous blockchain social media networks is Steemit, a decentralised application that runs on the Steem blockchain.

Because the Steem blockchain has its own cryptocurrency, popular posters can be rewarded by readers through micropayments. Once content is posted on the Steem blockchain, it can never be removed.

Not all decentralised social media networks are built on blockchains, however. The Fediverse is an ecosystem of many servers that are independently owned, but which can communicate with one another and share data.

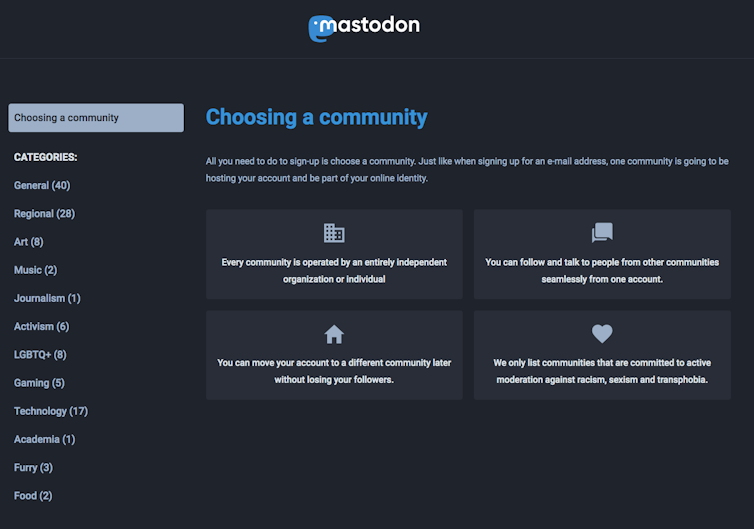

Mastodon is the most popular part of the Fediverse. Currently with close to three million users across more than 3,000 servers, this open-source platform is made up of a network of communities, similar to Reddit or Tumblr.

Users can create their own “instances” of Mastodon — with many separate instances forming the wider network — and share content by posting 500-character-limit “toots” (yes, toots). Each instance is privately operated and moderated, but its users can still communicate with other servers if they want to.

What do we gain?

A lot of concern around social media involves what content is being monetised and who benefits. Decentralised platforms often seek to shift the point of monetisation.

Platforms such as Steemit, Minds and DTube (another platform built on the Steem social blockchain) claim to flip the usual relationship, in which the platform rather than the user profits from content, by rewarding users when their content is shared.

Another purported benefit of decentralised social media is freedom of speech, as there’s no central point of censorship. In fact, many decentralised networks in recent years have been developed in response to moderation practices.


But even the most pro-free-speech platforms face challenges. There are always malicious people, such as violent extremists, terrorists and child pornographers, who should not be allowed to post at will. So in practice, every decentralised network requires some sort of moderation.

Mastodon provides a set of guidelines for user conduct and has moderators within particular servers (or communities). They have the power to disable, silence or suspend user access and even to apply server-wide moderation.

As such, each server sets its own rules. However, if a server is “misbehaving”, the entire server can be put under a domain block, with varying degrees of severity. Mastodon publicly lists the moderated servers and the reason for restriction, such as spreading conspiracy theories or hate speech.

Mastodon’s communities sign-up page says the platform is ‘committed to active moderation against racism, sexism and transphobia’.

Screenshot/Mastodon


Some systems are harder to moderate. Blockchain-based social network Minds claims to base its content policy on the First Amendment of the US Constitution. The platform attracted controversy for hosting neo-Nazi groups.

Users who violate a rule receive a “strike”. Where the violation relates to “not safe for work” (NSFW) content, three strikes may result in the user being tagged under a NSFW filter. If this happens, other users must opt in to view the NSFW content, for “total control” of their feed.

Minds’ content policy states that NSFW content excludes posts of an illegal nature. Illegal posts result in an immediate user ban and removal of the content. If a user wants to appeal a decision, the verdict comes from a randomly selected jury of users.
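The rules described above can be sketched as a small piece of logic. This is a simplified illustration under stated assumptions, not Minds’ actual code: the `Account` class, the violation labels and the jury size of five are all hypothetical, and the article says only that three NSFW strikes "may" lead to the tag, whereas the sketch applies it automatically.

```python
import random
from collections import Counter

NSFW_STRIKE_LIMIT = 3  # per the policy described above; enforcement details are assumed


class Account:
    """Hypothetical user record tracking strikes and moderation status."""

    def __init__(self, name):
        self.name = name
        self.strikes = Counter()  # strikes counted per violation type
        self.nsfw_tagged = False
        self.banned = False


def record_violation(account, kind):
    """Apply one violation; `kind` is an illustrative label such as 'nsfw' or 'illegal'."""
    if kind == "illegal":
        account.banned = True  # illegal content leads to an immediate ban
        return
    account.strikes[kind] += 1
    if account.strikes["nsfw"] >= NSFW_STRIKE_LIMIT:
        account.nsfw_tagged = True  # other users must now opt in to see this account


def pick_jury(user_names, appellant, size=5, rng=random):
    """Randomly select jurors for an appeal, excluding the appellant (size is assumed)."""
    pool = [name for name in user_names if name != appellant]
    return rng.sample(pool, min(size, len(pool)))


user = Account("example_user")
for _ in range(3):
    record_violation(user, "nsfw")
print(user.nsfw_tagged)  # True

jury = pick_jury(["ann", "bo", "cy", "di", "ed", "example_user"], "example_user", size=3)
print(len(jury))  # 3
```

How the jurors reach a verdict is not specified in the policy as summarised here, so the sketch stops at selecting them.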

Even blockchain-based social media networks have content moderation systems. For example, Peepeth has a code of conduct adapted from a speech by Vietnamese Thiền Buddhist monk and peace activist Thích Nhất Hạnh.

“Peeps” falling afoul of the code are removed from the main feed accessible from the Peepeth website. But since all content is recorded on the blockchain, it continues to be accessible to those with the technical know-how to retrieve it.

Steemit will also delete illegal or harmful content from its user-accessible feed, but the content remains on the Steem blockchain indefinitely.
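The gap between what a website shows and what the underlying ledger holds can be illustrated with a short sketch. It is a toy model under stated assumptions, not Peepeth’s or Steemit’s real architecture: the `Ledger` and `Frontend` classes and their methods are hypothetical names chosen for the example.

```python
class Ledger:
    """Append-only store: entries can be added but never changed or deleted."""

    def __init__(self):
        self._entries = []

    def append(self, author, text):
        self._entries.append((author, text))
        return len(self._entries) - 1  # the position doubles as a post ID

    def read_all(self):
        return list(self._entries)  # return a copy so callers cannot edit the ledger


class Frontend:
    """A website's view of the ledger, with its own list of hidden posts."""

    def __init__(self, ledger):
        self.ledger = ledger
        self.hidden_ids = set()

    def hide(self, post_id):
        """Moderation here only affects what this site displays."""
        self.hidden_ids.add(post_id)

    def feed(self):
        return [
            entry
            for post_id, entry in enumerate(self.ledger.read_all())
            if post_id not in self.hidden_ids
        ]


ledger = Ledger()
site = Frontend(ledger)
ledger.append("alice", "hello world")
bad_id = ledger.append("mallory", "post that breaks the code of conduct")
site.hide(bad_id)

print(len(site.feed()))        # 1
print(len(ledger.read_all()))  # 2
```

Anyone who reads the ledger directly, rather than through the moderated site, still sees both posts, which is the limitation the two platforms above share.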


The search for open and safe platforms continues

While some decentralised platforms may claim to offer a free-for-all, the reality of using them shows that some level of moderation is both inevitable and necessary for even the most censorship-resistant networks. There are a host of moral and legal obligations which are unavoidable.

Traditional platforms including Twitter and Facebook rely on the moral responsibility of a central authority. At the same time, they are the target of political and social pressure.

Decentralised platforms have had to come up with more complex, and in some ways less satisfying, moderation techniques. But despite being innovative, they don’t really resolve the tension between moderating those who wish to cause harm and maximising free speech.
