Aristotle and the chatbot: how ancient rules of logic could make artificial intelligence more human

- Written by David Ireland, Research Scientist at the Australian E-Health Research Centre, CSIRO

Many attempts to develop artificial intelligence rely on powerful systems of mathematical logic. These tend to produce results that make logical sense to a computer program, but the results are not very human.

In our work building therapy chatbots, we have found using a different kind of logic — one first formalised by the Greek philosopher Aristotle more than 2,000 years ago — can produce results that are more fallible, but also much more like real people.


The different kinds of logic

The underpinning science of our chatbots is formal logic. Modern formal logic has its basis in mathematics — but that wasn’t always the case.

The discovery and formalisation of logic is attributed to Aristotle (384-322 BC) in his collected works, the Organon (or “instrument”).

Here he documented the first principle of reaching a conclusion from a set of premises. This would later be called inference, guided by rules known as syllogisms.

Since the 20th century, the field of logic has moved away from Aristotle’s approach towards systems that use predicate and propositional logic. These types of logic have been developed by mathematicians for mathematical applications; hence they are referred to as mathematical logics. Their reasoning is required to be infallible.

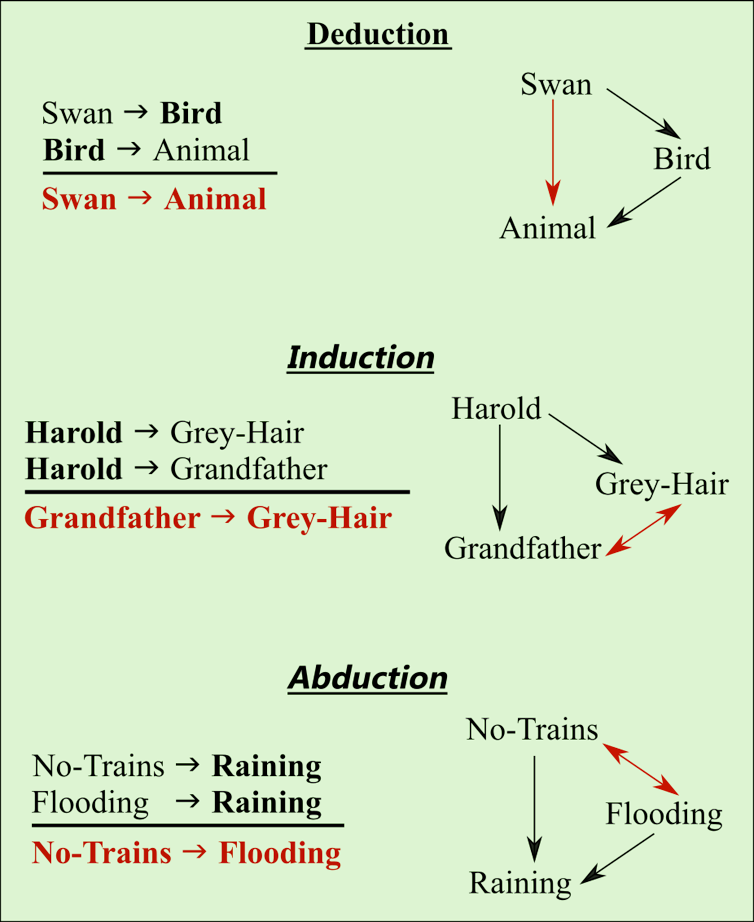

Human reasoning, on the other hand, is not always infallible. We mainly reason via deduction, induction and abduction.

You can think of deduction as using generalised rules to reason about a specific example, while induction and abduction involve looking at a collection of examples and trying to work out the rules that explain them.

While deduction tends to be most accurate, induction and abduction are less reliable. These are complex processes not easily programmed into machines.

Arguably, induction and abduction are what separate human intelligence, which is vast and general but often inaccurate, from the narrow yet increasingly accurate intelligence of machines.

Chat logic

We have found that using mathematical logic makes our chatbots less able to have meaningful interactions with humans.

For example, a single human utterance often makes little sense without a large context of what linguists call entailments, presuppositions and implicatures.

While our brains factor in this context automatically, machines must use some form of equivalent logic.

Artificial general intelligence

One school of thought suggests parts of Aristotle’s logic, nowadays referred to as term logic, and his rules of inference, could form core components of an artificial general intelligence (AGI).

OpenCog and OpenNars are prominent AGI research platforms with term logic at their core. At present, these platforms are capable of general-purpose reasoning, with potential applications in health and robotics.

A robot using the OpenCog system in 2016.

Term logic

Term logic is composed of basic units of meaning, which are linked by what linguists call a “copula”. To write “a bird is an animal” in term logic, we could use the copula denoted “->”, which intuitively means “is a special kind of”, like this:

Bird -> Animal

This is a very simple example, but more complex and expressive statements are also possible.
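To make the notation concrete, here is a minimal Python sketch of how a statement such as `Bird -> Animal` might be held in a program: two terms joined by the copula. The names `Statement`, `parse` and `COPULA` are hypothetical and cover only this single copula; real term-logic systems such as OpenNars use a richer grammar (Narsese) with several copulas and compound terms.

```python
from typing import NamedTuple

COPULA = "->"  # hypothetical: the single copula, read as "is a special kind of"


class Statement(NamedTuple):
    """A term-logic statement: a subject term and a predicate term linked by the copula."""
    subject: str
    predicate: str

    def __str__(self) -> str:
        return f"{self.subject} {COPULA} {self.predicate}"

    def in_english(self) -> str:
        return f"{self.subject} is a special kind of {self.predicate}"


def parse(text: str) -> Statement:
    """Parse 'Subject -> Predicate' into a Statement; reject anything else."""
    subject, sep, predicate = text.partition(COPULA)
    subject, predicate = subject.strip(), predicate.strip()
    if not sep or not subject or not predicate:
        raise ValueError(f"not a term-logic statement: {text!r}")
    return Statement(subject, predicate)


s = parse("Bird -> Animal")
print(s)               # Bird -> Animal
print(s.in_english())  # Bird is a special kind of Animal
```

Keeping the two terms as separate fields, rather than as one string, is what later lets a program spot when two statements share a term.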

Term logic and syllogisms also avoid some of the logical paradoxes that often occur when fitting natural language into a logical framework.

For example, in most systems of formal logic, a nonsense statement like “if the moon is made of cheese, the world is coming to an end” counts as true, simply because its first part is false. (This is called the paradox of material implication, and it arises because “if” often means something very different in natural language from what it means in formal logic.)
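The paradox falls straight out of how classical logic defines “if P then Q”: as “not P, or Q”. A short Python check (the function name `implies` is ours) shows the moon-and-cheese conditional coming out true purely because its first part is false.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication as defined in classical propositional logic."""
    return (not p) or q


moon_is_cheese = False   # first part: plainly false
world_is_ending = False  # second part: false as well
print(implies(moon_is_cheese, world_is_ending))  # True

# Full truth table: the conditional is false only when P is true and Q is false.
for p, q in product([True, False], repeat=2):
    print(f"{p!s:5} -> {q!s:5} : {implies(p, q)}")
```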

Aristotle, however, held that a syllogism's conclusion must follow from two independent premises that share one (and only one) term. This rule lets us dismiss the argument above, as its two parts (“the moon is made of cheese” and “the world is coming to an end”) share no term.
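Aristotle's rule can be checked mechanically. In this simplified sketch each premise is reduced to the set of terms it mentions (ignoring which term is subject and which is predicate), and two premises qualify only if those sets overlap in exactly one term. The helpers `terms` and `can_form_syllogism` are hypothetical.

```python
def terms(*words: str) -> frozenset:
    """Represent a premise by the set of terms it mentions (lower-cased)."""
    return frozenset(w.lower() for w in words)


def can_form_syllogism(premise_a: frozenset, premise_b: frozenset) -> bool:
    """Aristotle's rule: the two premises must share one, and only one, term."""
    return len(premise_a & premise_b) == 1


moon = terms("moon", "cheese")   # "the moon is made of cheese"
world = terms("world", "end")    # "the world is coming to an end"
print(can_form_syllogism(moon, world))   # False: no shared term

robin = terms("robin", "bird")   # "a robin is a bird"
bird = terms("bird", "animal")   # "a bird is an animal"
print(can_form_syllogism(robin, bird))   # True: they share "bird"
```

The same check also rejects a premise paired with itself, since it shares two terms rather than one, which matches the requirement that the premises be independent.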

Fallible reasoning

AGI researchers have extended Aristotle’s syllogisms by allowing conclusions that may be true with a degree of uncertainty (fallible reasoning) as well as those that must be true (like those from deductive reasoning). Term logic readily supports these forms of reasoning.

Examples of different forms of reasoning that term logic aptly supports. Conclusions are given in red. Deduction is infallible while induction and abduction are fallible.

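In term logic, the three forms of reasoning named above differ only in where the shared (middle) term sits in the two premises. The sketch below assumes the premise patterns used in the Non-Axiomatic Logic (NAL) literature behind OpenNars (deduction: M -> P, S -> M; induction: M -> P, M -> S; abduction: P -> M, S -> M) and uses hypothetical names `Term` and `infer`. It deliberately ignores truth values, so it shows the shape of each inference but not how uncertain the induction and abduction conclusions are.

```python
from typing import NamedTuple, Optional, Tuple


class Term(NamedTuple):
    """A statement 'subject -> predicate', read 'subject is a special kind of predicate'."""
    subject: str
    predicate: str


def infer(p1: Term, p2: Term) -> Optional[Tuple[str, Term]]:
    """Name the form of inference two premises support, together with its conclusion."""
    shared = {p1.subject, p1.predicate} & {p2.subject, p2.predicate}
    if len(shared) != 1:
        return None  # no single middle term, so no syllogism
    (m,) = shared
    if p1.subject == m and p2.predicate == m:
        # M -> P, S -> M  |-  S -> P   (deduction: the conclusion must hold)
        return "deduction", Term(p2.subject, p1.predicate)
    if p1.subject == m and p2.subject == m:
        # M -> P, M -> S  |-  S -> P   (induction: the conclusion may hold)
        return "induction", Term(p2.predicate, p1.predicate)
    if p1.predicate == m and p2.predicate == m:
        # P -> M, S -> M  |-  S -> P   (abduction: the conclusion may hold)
        return "abduction", Term(p2.subject, p1.subject)
    # Only remaining case is S -> M, M -> P: deduction with the premises swapped.
    return infer(p2, p1)


print(infer(Term("Bird", "Animal"), Term("Robin", "Bird")))   # a robin is an animal
print(infer(Term("Robin", "Flyer"), Term("Robin", "Bird")))   # birds are flyers?
print(infer(Term("Bird", "Flyer"), Term("Robin", "Flyer")))   # a robin is a bird?
```

The induction and abduction conclusions here are plausible but not guaranteed: one flying robin does not prove that all birds fly, and sharing the ability to fly does not prove that a robin is a bird.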

Beliefs and truths

Now that we can derive conclusions that may be true, we need to identify these as beliefs with a corresponding truth value.

AGI researchers differ on how to determine the truth value of a belief. The OpenNars project's approach is closest to the human belief system: it counts the number of independent pieces of evidence for and against a belief to determine how much confidence to place in it.
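OpenNars builds on Pei Wang's Non-Axiomatic Logic, where, as we understand it, a belief's frequency is the share of positive evidence, f = w+/w, and its confidence is c = w/(w + k) for a constant k. The Python sketch below applies those two formulas to a running tally of evidence. The `Belief` class, the choice k = 1, and the rule of counting each observation as one piece of evidence are our assumptions, not code from OpenNars.

```python
from dataclasses import dataclass
from typing import Optional

K = 1.0  # assumed "evidential horizon"; 1 is a commonly used NAL default


@dataclass
class Belief:
    statement: str
    positive: int = 0  # w+: independent pieces of evidence for the statement
    negative: int = 0  # w-: independent pieces of evidence against it

    def observe(self, supports: bool) -> None:
        """Record one new, independent piece of evidence."""
        if supports:
            self.positive += 1
        else:
            self.negative += 1

    @property
    def total(self) -> int:
        return self.positive + self.negative

    @property
    def frequency(self) -> Optional[float]:
        """Share of the evidence supporting the statement (None before any evidence)."""
        return self.positive / self.total if self.total else None

    @property
    def confidence(self) -> float:
        """Grows towards 1 as evidence accumulates, but never reaches it."""
        return self.total / (self.total + K)


b = Belief("Robin -> Flyer")
for seen_flying in [True, True, True, False]:
    b.observe(seen_flying)
print(b.frequency, b.confidence)  # 0.75 0.8
```

Because confidence depends on the amount of evidence rather than on its direction, a belief can be held with high confidence that it is usually false, which is a distinction a single true/false value cannot express.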

Virtual AI companions

So how can Aristotle’s voice be heard in our chatbot technology?

At the CSIRO Australian e-Health Research Centre we are developing chatbots to help people better manage their health and wellbeing.


We have started to use AGI in our chatbot technology for people who have communication challenges and who benefit from interacting with technology. Our version is mostly inspired by the OpenNars platform, but adds other components we found useful.

Rather than simply computing a response from a sequence of words, the chatbot derives its responses from the relationships between billions of terms. Beliefs with low confidence can be sent back to the user (for example, a person asking a health chatbot about symptoms) as questions.
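The article does not say how low-confidence beliefs are turned into questions, so the following is only one hypothetical way to do it: filter beliefs below a confidence cut-off and phrase each as a yes/no question, least certain first. The `Belief` fields, the 0.5 threshold and the question template are all illustrative assumptions.

```python
from typing import List, NamedTuple


class Belief(NamedTuple):
    subject: str
    predicate: str
    frequency: float   # how often the evidence agreed
    confidence: float  # how much evidence there was


CONFIDENCE_THRESHOLD = 0.5  # hypothetical cut-off; the article gives no value


def follow_up_questions(beliefs: List[Belief], threshold: float = CONFIDENCE_THRESHOLD) -> List[str]:
    """Turn low-confidence beliefs into questions for the user, least certain first."""
    unsure = sorted((b for b in beliefs if b.confidence < threshold), key=lambda b: b.confidence)
    return [f"Would you say {b.subject} is {b.predicate}?" for b in unsure]


beliefs = [
    Belief("your headache", "worse in the morning", 0.8, 0.30),
    Belief("your sleep", "disrupted", 0.6, 0.45),
    Belief("a headache", "a symptom", 1.0, 0.90),
]
for question in follow_up_questions(beliefs):
    print(question)
```

Each answer the user gives can then be recorded as fresh evidence, raising or lowering the confidence of the belief that prompted the question.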

In the future, we think this will allow more engaging, deeper and more natural interactions between humans and machines. The beliefs and “personality” of the chatbot will become tailored to the user.

Aristotle’s 2,000-year-old logic has had a profound influence on Western civilisation. A revamp of his ancient works could very well shift us into a new frontier of human-computer interaction.
