What are tech companies doing about ethical use of data? Not much

- Written by James Arvanitakis, Professor in Cultural and Social Analysis, Western Sydney University

Our relationship with tech companies has changed significantly over the past 18 months. Ongoing data breaches, and the revelations surrounding the Cambridge Analytica scandal, have raised concerns about who owns our data, and how it is being used and shared.

Tech companies have vowed to do better. Following his grilling by both the US Congress and the European Parliament, Facebook CEO Mark Zuckerberg said Facebook will change the way it shares data with third-party suppliers. There is some evidence that this is occurring, particularly with advertisers.

But have tech companies really changed their ways? After all, data is now a primary asset in the modern economy.

To find out whether there’s been a significant realignment between community expectations and corporate behaviour, we analysed the data ethics principles and initiatives that various global organisations have committed to since the various scandals broke.

What we found is concerning. Some of the largest organisations have not demonstrably altered practices, instead signing up to ethics initiatives that are neither enforced nor enforceable.

How we tracked this information

Before discussing our findings, some points of clarification.

Firstly, the issues of data, artificial intelligence (AI), machine learning and algorithms are difficult to draw apart, and their scope is contested. In fact, for most of these organisations, the concepts are lumped together, while for researchers and policy makers they present distinctly different challenges.

For example, machine learning, while a branch of AI, is about building systems that learn patterns from data rather than following explicitly programmed rules. As such, policy makers must ensure that machine learning algorithms are free from bias and take into consideration various social and economic issues, rather than treating everyone the same.
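As a rough illustration of what checking an algorithm for bias can involve, the sketch below compares how often a system returns a favourable decision for different groups of people. It is only one of many possible checks, and everything in it is an assumption made for illustration: the function names `selection_rates` and `parity_gap`, the group labels and the toy decisions are hypothetical and do not come from any company or dataset discussed in this article.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favourable decisions (1) received by each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions, groups):
    """Return the difference between the highest and lowest group rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = favourable decision, 0 = unfavourable decision.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = parity_gap(decisions, groups)
print(rates, gap)
```

A large gap between groups does not prove discrimination on its own, but it flags a pattern that would need to be explained.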

Secondly, the policies, statements and guidelines of the companies we looked at are not centrally located, consistently presented or simple to decipher.

Accounting for the lack of a consistent approach to data ethics taken by technology companies, our method was to survey visible steps undertaken, and to look at the broad ethical principles embraced.

Five broad categories of data ethics

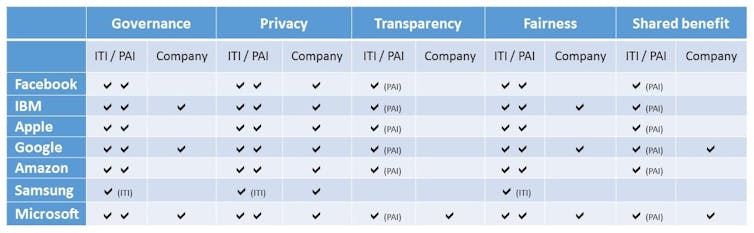

Some companies, such as Microsoft, IBM, and Google, have published their own AI ethical principles.

More companies, including Facebook and Amazon, have opted to keep an arm’s-length approach to ethics by joining consortiums, such as the Partnership on AI (PAI) and the Information Technology Industry Council (ITI). These two consortiums have published statements containing ethical principles. The principles are voluntary, and have no reporting requirements, objective standards or oversight.

We examined the content of the published ethical guidelines of these companies and consortiums, and found the principles fell into five broad categories.

Privacy: privacy is widely acknowledged as an area of importance, highlighting that the focus for most of these organisations is a traditional consumer/supplier relationship. That is, the data provided by consumers is now owned by the company, which will use this data but respect confidentiality.

Governance: these principles are about accountability in data management, ensuring quality and accuracy of data, and the ethical application of algorithms. The focus here is on the internal processes that should be followed.

Fairness: fairness means using data and algorithms in a way that respects the person behind the data. That means taking safety into consideration, and recognising the impact the use of data can have on people’s lives. This includes a recognition of how algorithms relying on historical data or flawed programming can discriminate against marginalised communities.

Shared benefit: this refers to the idea that data is owned by those who produce it and, as such, there should be joint control of the data, as well as shared benefits. We noted a lack of consensus on this category, and little intention to adhere to it.

Transparency: it is here that a more nuanced understanding of data ownership begins to emerge. Transparency essentially refers to being open about the way data is collected and used, as well as eschewing unnecessary data collection. Given the commercial imperative of companies to protect confidential research and development, it’s not surprising this principle is only acknowledged by a handful of players.

Initiatives big tech companies have signed up to in particular categories of data ethics.

Author provided

Fairness and transparency are important

Our research suggests conversations about data ethics are largely focused on privacy and governance. But these principles are the minimum expected in a legal framework. If anything, the scandals of the past have shown us this is not enough.

Facebook is notable as a company keeping an arm’s-length approach to ethics. It’s a member of the Partnership on AI and the Information Technology Industry Council, but has eschewed publication of its own data ethics principles. And while there have been rumblings about a so-called “Fairness Flow” machine learning bias detector, and rumours of an ethics team at Facebook, details of both developments are sketchy.

Meanwhile, the extent to which the Partnership on AI and the Information Technology Industry Council influence the behaviour of member companies is highly questionable. The Partnership on AI, which has more than 70 members, was formed in 2016, but it has yet to demonstrate any tangible outcomes beyond the publication of key tenets.

Better regulation is required

For tech companies, there may be a trade-off between treating data ethically and how much money they can make from that data. There is also a lack of consensus between companies about what the correct ethical approach looks like. So, in order to protect the public, external guidance and oversight are needed.

Unfortunately, in its new Data Sharing and Release legislation, the Australian Government has so far kept its focus on privacy – a principle that’s already covered in legislation elsewhere.

The data-related events of the last few years have confirmed we need a greater focus on data as a citizen right, not just a consumer right. As such, we need greater focus on fairness, transparency and shared benefit – all areas that are currently being neglected by companies and government alike.

The author would like to acknowledge the significant contribution made to this article by Laura Hill, a student in the Western Sydney University Bachelor of Law graduate entry program.

Read more http://theconversation.com/what-are-tech-companies-doing-about-ethical-use-of-data-not-much-104845