What matters in Australian culture

- Written by Julian Meyrick, Professor of Creative Arts, Flinders University

This is an edited extract from the new book What Matters? Talking Value in Australian Culture. It is a longer read, at just under 2500 words.

The demand for certainty is one which is natural to man, but is nevertheless an intellectual vice … To endure uncertainty is difficult, but so are most of the other virtues. - Bertrand Russell

When did culture become a number? When did the books, paintings, poems, plays, songs, films, games, art installations, clothes, and all the myriad objects that fill our lives and which we consider cultural, become a matter of statistical measurement?

When did the value of culture become solely a matter of the quantifiable benefits it provides, and the latter become subject to input–output analysis in what government budgets refer to as “the cultural function”? When did experience become data?

Perhaps a more important question is why did it happen, and why does it keep happening? Also, how does it happen? Culture is innate to being human. Thick books have been written describing culture’s myriad expressions and meanings. Culture has been around for as long as humanity itself. And the question of its value is not new.

But why are we answering it in the way that we are – by turning it into something to be scaled, measured and benchmarked? Who loses and who gains?

These are big questions of more than academic or Australian import. They are, indeed, much broader than arts and culture, as a recent crop of studies describing the unintended effects of the rise of “metric power” suggests.

How can data help assess the merit of a book?

Our core contention is that datafied modes of analysis are claiming authority over domains of human existence they have limited capacity for understanding. If you are researching an influenza epidemic, more data is better data.

If you are studying Australian film, more data is informative but not definitive because questions of artistic merit can only be judged. If you are assessing Christina Stead’s The Man Who Loved Children, massified data is close to useless.

At a time when even accountants are looking for a more compelling understanding of value, it is imperative that the arts – a domain where individual experience is central – resist the evangelical call of quantification and winnow its potential benefits from its real and deleterious risks.

Alienating language

What Matters? hopes to influence public debate about the value of culture, to encourage people to see their cultural experiences in that debate, and not feel some strange urge to speak the “language of government”. This can be extremely alienating.

Consider a 2008 Australian Bureau of Statistics paper, Towards Comparable Statistics for Cultural Heritage Organisations. It proposes “a list of Key Measures … balancing the priority of items across four cultural heritage domains with the feasibility of producing standard guidelines for collecting data”. The five Key Measures it puts forward – Attendance, Visitor Characteristics, Financial Resources, Human Resources and The Collection – subsume 18 Detailed Measures, with a list of Counting Rules for each.

This is a long way from browsing a library shelf, or walking through an exhibition. A long way from reading a book, contemplating the mystery of ancient artefacts, or librarians helping people navigate online genealogy portals.

Such language generates a detached world of arithmetical marks, and of the sums and inferences considered legitimate for those marks. Where does the experience of going to a library or museum fit in? Not in ABS statistics, obviously, and no doubt the bureau would not think itself competent to pronounce on such “qualitative” matters. Who does, then? And how do “qualitative” matters sit with quantitative enumeration?

Where does the experience of visiting a museum fit in? A visitor at the ‘Songlines: Tracking the Seven Sisters’ exhibition at the National Museum of Australia in February.

Lukas Cooch/AAP

A petri dish

The datafication of arts and culture is only a few decades old, so it is not an essential or inevitable element of their assessment. What happens when this is the major way we describe their place in our lives? In 2013, we began a university research project of moderate scope seeking to understand how quantitative and qualitative indicators align in government measures of culture. As we were in Adelaide, we made that city our focus. We called the project Laboratory Adelaide because we saw it as a case study with a rich cultural history and an active contemporary arts scene: a petri dish of just the right scale.

To get our research off the ground, we held a lunch for some of Adelaide’s cultural leaders. Over dessert, we asked them what they wanted us to achieve. The answer was instructive: a way to talk truthfully about what they do. They were, they said, unable to incorporate their real motivations and experiences into their reporting. Could we find a new, better way of communicating the actual value of arts and culture?

Visitors at ‘the obliteration room’, an artwork by Japanese artist Yayoi Kusama, at GOMA in 2015.

Dave Hunt/AAP

For the first year this seemed a simple enough goal. After all, these people were doing things the public had ready access to. Both state and local government in South Australia had a record of acknowledging the contribution of culture. They supported a range of cultural organisations and events – especially festivals, which are a big part of Adelaide’s civic life.

There was a sense that everyone already knew how important culture was to the state. But when it came to demonstrating its value, the words weren’t there. Our job was to fix that. As humanities scholars, we felt we were in a good position to do so. After all, didn’t we spend our lives talking about culture?

As the second year drew on, a note of uncertainty entered the project. By now we were starting to publish, and to articulate in a series of articles, columns, notes, letters and emails, the dimensions of the problem as we saw it – the short time-scales governments deploy to evaluate outcomes, for example, which ignore culture’s longer-term contribution. Or the woolly use of language in policy documents, which makes the precise meaning of terms like “excellence” and “innovation” impossible to pin down.

These issues, and others, have serious implications for assessment. But the problem goes beyond them: they highlight a basic misapprehension of culture by governments, not on a human level – politicians and policy makers, like the rest of us, read books, watch films and listen to music – but on the official level.

Did the data deliver?

Meanwhile, political events intervened and the Australian cultural sector exploded like a supernova. In 2015, the then federal Arts Minister, George Brandis, raided the budget of the federal arts agency, the Australia Council for the Arts, to set up his own, personally administered grant body. To say this came as a shock to arts practitioners would be a considerable understatement. The minister’s actions contradicted 30 years of cross-party consensus about how culture in Australia should be federally funded – via independent agencies – and rendered his support of the Council’s 2014–19 Strategic Plan a sham. The sector went into uproar. Where did the years of accumulated data on the demonstrable benefits of arts and culture figure in this fiery clash of ideologies?

The answer is that they didn’t. The numerical proofs of culture’s value (mainly economic value) that have been cascading through government consciousness since the 1970s were nowhere to be seen. For Laboratory Adelaide it confirmed a growing conviction: the problem of the value of culture is not a methodological one. It cannot be addressed by a new metric. Use of measurement indicators assumes a degree of background understanding that too often isn’t there at a policy level. The quantitative demonstrations of value we were trying to improve don’t make sense for culture. They flatten out its history, purpose and meaning.

This realisation put us at odds with expert views about the role of culture in post-industrial societies today. These views are typically upbeat about culture’s economic and urban “vibrancy”. Charles Landry’s “creative cities”, Richard Florida’s “creative class”, John Hartley and Terry Flew’s “creative industries” – the ideas of these authors, and others of similar ilk, are practical and positive (though Florida has recently retreated to a gloomier position).

The problem of the value of culture is not a methodological one.

Jeremy Ng/AAP

The “creative industries” approach, for example, treats policy-making processes with a benign eye and admits no serious difficulties when it comes to proving the benefits culture provides. This chipper outlook is mirrored by swarms of local efforts around Australia today to develop bespoke systems of “cultural indicators”, each slightly different, yet each beholden to the same underlying assumption: that the value of culture can be numerically demonstrated.

By now we were participating in conferences, and consulting broadly among arts agencies and peak bodies. It sometimes seemed to us that everyone was looking for the perfect metric. We sat through presentation after presentation on quantitative approaches to culture’s value. At the end, hands would invariably go up and people would say they were developing a “similar measurement model”.

Yet underneath the relentless optimism, we sensed a current of troubled preoccupation. It went by different names: “the intrinsic value of culture”, “the inherent value of culture”, “(the) cultural value (of culture)”. In this dry form it seems just another dimension of culture’s value, to be arrayed alongside the others: its economic value, its social value, its heritage value, etc. It is not.

It is code for all that is left out of measurement indices, which is to say our whole sense of culture, of what culture means. It seems obvious to say it, but in culture No Meaning = No Value. It may not be true of boots, bread and billiard balls. But it is absolutely true of symbolic goods like paintings, performances and books.

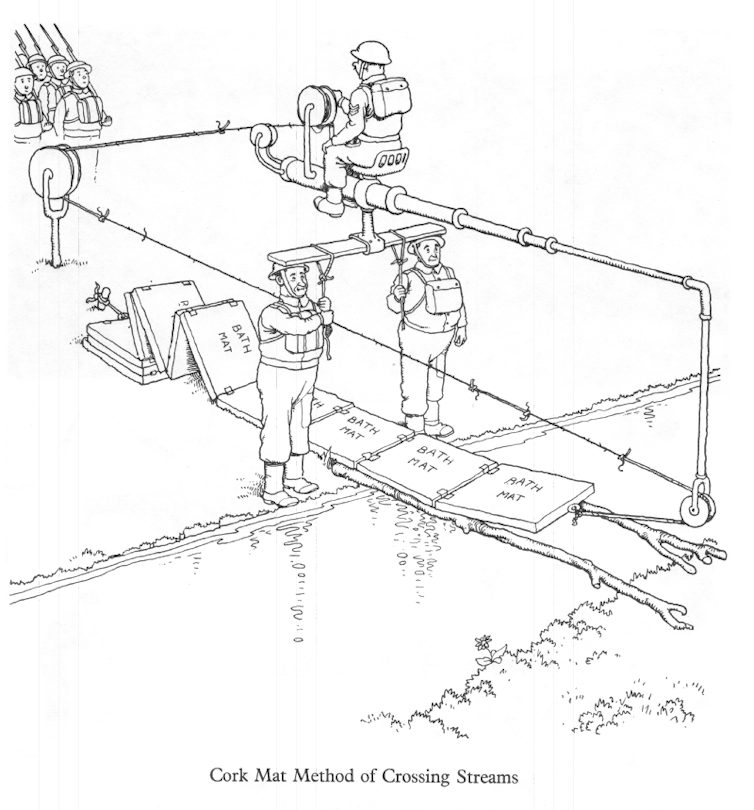

For thousands, possibly tens of thousands of years, culture has been supported through patronage. Whether it came from kings, popes or rich merchants, it came the same way: by someone seeing a particular cultural thing or activity and personally choosing to fund it. We have replaced this simple, if limited, support mechanism with distanced assessment processes of Heath-Robinson complexity.

William Heath Robinson: Cork Mat Method of Crossing Streams.

Wikimedia Commons

These processes – involving submission forms, acquittal procedures, classification systems, priority lists – lose the immediacy of cultural experience. They are generalised and abstract, with cultural experience framed as a matter of personal taste, and opinions in relation to it “subjective”. Numbers then present as “objective”, whether or not they reflect the core elements of culture. Hence the desire to quantify as much of its assessment as possible.

Where to from here?

But like mirages of water on a hot road, the pursuit of numbers begets only the pursuit of more numbers. You might count, for example, the number of people going to a music concert (a measure of frequency). But did they have a good time (the value proposition)?

You might question some of them, and rank their answers (on a preference scale of one to five). But were they being truthful (response bias)? You might look for changes in their market choices thereafter (acquisition of consumption skills). But what of less obvious effects – on wellbeing, level of education, social participation, civic cohesion? More indices, more numbers. The search for certainty produces ever-more uncertain measures, each a further step away from the actual experience of culture. As the numbers get more rubbery and elaborate, people’s trust in them diminishes.

And it’s expensive. The statistical habit, like any habit, is one that requires significant investment. Is there a cost-benefit analysis to be done on our obsession with cost-benefit analysis? At what point do we stop trying to measure something and try to understand it better?

And it doesn’t help. The ever more elaborate datafication of culture hasn’t secured more money for arts and culture in Australia, or distributed the extant money better. If it assists an organisation to obtain an increase in public support in one grant round, there is no guarantee it will continue in the next.

This was the problem of culture’s value as it appeared to us in our third year, when we saw the full extent of what we had stumbled into. Beneath the inexorable pursuit of numbers-driven data lay a Dante-esque vortex of hope, despair, panic and bewilderment masked by the neutral patois of quantitative analysis – the bullshit language that Adelaide’s cultural leaders resented so deeply.

Joseph Anton Koch: fresco of Dante’s inferno.

Wikimedia Commons

So where to from here? It is a question with profound implications, and not one Laboratory Adelaide can answer conclusively. However, we have identified some of the difficulties in valuing culture that governments and the public must meet head-on. They are not the only problems, but they are important ones:

- The fact that assessment processes claim to measure value but leave out the human experience of culture, and turn it into a set of abstract, categorical traits.
- The fact that assessment processes are preoccupied with short-term effects, ignore the longer-term trajectories of cultural projects, and have a sense of history that is flat and inorganic.
- The fact that assessment processes use language and phrases empty of specific meaning for culture (i.e. bullshit), or valorise words that have no universally agreed definition (e.g. excellence or innovation).
- The fact that people who experience culture are treated as consumers in a marketplace rather than members of a public, so public value (the underlying purpose of public investment) is inadequately addressed.
- The fact that cultural organisations are regarded as scaled-up delivery mechanisms for policy outcomes, rather than as a serious and nuanced ecology worthy of study and support.
- The fact that too often the value of culture is reduced to a dollar value, directly or indirectly.

Objectors might say that culture is considered in assessment processes, by way of peer review and ministerial oversight. They are right to some extent – but it is a declining extent.

Peer assessors and politicians retain an important role in how culture is evaluated in Australia today, both before and after it attracts government funding. But compared to the huge social outlay in gathering statistics and developing metrics, and our almost religious faith in quantitative measurement, the place of judgement in valuing culture is a reluctant admission, an ageing relative inclined to embarrassing assertions, to be kept on a tight statistical leash. The human dimension of the problem of value is presumed to take care of itself. Only when it goes wrong, as it did under Senator Brandis, does it become a matter of strong attention.

What Matters? Talking Value in Australian Culture by Julian Meyrick, Robert Phiddian and Tully Barnett is published by Monash University Publishing and launched today.
